Unlocking the Power of Machine Learning: A Journey into the World of Artificial Intelligence.

A warm welcome to all the avid readers and passionate learners who have embarked on this exciting journey into the realm of Machine Learning! Your presence here is truly appreciated, and I’m thrilled to have you join us on this exploration of the fascinating world of ML and its myriad use cases.

So, without further ado, let’s embark on this adventure together and unlock the immense potential of Machine Learning!

Machine Learning (ML) is a subset of artificial intelligence (AI) that focuses on developing algorithms and models that enable computers to learn and make predictions or decisions without being explicitly programmed. It is a data-driven approach that allows machines to analyze and interpret complex patterns and relationships in data, extracting meaningful insights and making accurate predictions.

To put it more simply,

Machine Learning is a part of Artificial Intelligence where computers are taught to learn and make predictions or decisions on their own. What’s fascinating about it is that they can do this without being told exactly what to do at every step. Instead, they learn from data and figure things out by themselves!

Here’s an example😸🐈⬛

Let’s say you want the computer to recognize pictures of cats. Instead of giving it a long list of rules like “look for pointy ears” or “check for a tail,” you would show it lots of pictures of cats and tell it, “These are cats.” The computer would then analyze those pictures, find common patterns, and learn what makes a cat a cat. Later, when you show it a new picture, it can tell you whether it’s a cat or not based on what it learned before.

ML is like teaching a computer to learn from examples and use that knowledge to make smart decisions or predictions. It’s like having a super-smart assistant that can analyze large amounts of information and find hidden patterns. And that’s why ML is so important and exciting — it allows computers to solve complex problems and make accurate predictions in ways we never thought possible before.

Key Concepts in Machine Learning:

1. Data: Machine learning algorithms rely on vast amounts of data to learn and make predictions. The quality and quantity of data play a crucial role in the accuracy and effectiveness of ML models.

2. Training: In supervised learning, ML models are trained on labeled data, where the desired output or prediction is already known. During the training phase, the model learns patterns and relationships in the data so that it can generalize and make predictions on new, unseen data.

3. Features: Features are specific attributes or characteristics of the data that the ML model uses to make predictions. Identifying and selecting relevant features is a critical step in the ML process, as it directly impacts the model’s performance.

4. Algorithms: Machine learning algorithms form the core of the ML process. They are mathematical procedures that process data, learn patterns, and produce models that make predictions. Examples of popular ML algorithms include linear regression, decision trees, support vector machines, and neural networks.

5. Evaluation and Testing: ML models are evaluated using testing data to measure their performance and accuracy. Various metrics, such as accuracy, precision, recall, and F1 score, are used to assess the model’s effectiveness and identify areas for improvement.
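To make these evaluation metrics concrete, here is a minimal Python sketch that computes accuracy, precision, recall, and F1 score from a list of true labels and a list of predictions. The labels (1 = spam, 0 = not spam) and the helper name `evaluate` are made up for illustration; libraries such as scikit-learn provide equivalent, more complete functions.

```python
def evaluate(y_true, y_pred, positive=1):
    """Return accuracy, precision, recall, and F1 for a binary classifier."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)  # correctly flagged
    fp = sum(1 for t, p in pairs if t != positive and p == positive)  # false alarms
    fn = sum(1 for t, p in pairs if t == positive and p != positive)  # missed positives
    tn = len(pairs) - tp - fp - fn                                    # correctly ignored

    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical test-set labels (1 = spam, 0 = not spam) and model predictions
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 1, 0, 1, 0, 1, 1, 0]
print(evaluate(y_true, y_pred))
```

For these made-up labels, accuracy is 0.625 while precision (0.6) and recall (0.75) differ, which shows why looking at a single metric can hide where a model goes wrong.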

Before getting started with Machine Learning, it’s helpful to familiarize yourself with the following keywords and concepts:

1. Artificial Intelligence (AI): The broad field of computer science that focuses on creating intelligent machines that can mimic human cognitive abilities.

2. Supervised Learning: A type of ML where the algorithm learns from labeled data, where the desired output is already known, to make predictions or classify new, unseen data.

3. Unsupervised Learning: ML algorithms that analyze unlabeled data, identifying patterns and relationships without specific pre-defined outputs, often used for clustering or dimensionality reduction.

4. Reinforcement Learning: A type of ML where an agent learns to interact with an environment by taking actions and receiving feedback in the form of rewards or punishments, aiming to maximize long-term rewards.

5. Training Data: The dataset used to train ML models. In supervised learning, it consists of input features and the corresponding desired outputs or labels.

6. Testing Data: A separate dataset used to evaluate the performance of a trained ML model, providing an independent measure of its accuracy and generalization capabilities.

7. Feature Engineering: The process of selecting, transforming, or creating relevant features from the available data to improve the performance of ML models.

8. Model Evaluation Metrics: Quantitative measures used to assess the performance of ML models, such as accuracy, precision, recall, F1 score, and area under the curve (AUC).

9. Overfitting and Underfitting: Overfitting occurs when an ML model performs well on the training data but fails to generalize to new, unseen data. Underfitting, on the other hand, refers to a model’s inability to capture the underlying patterns in the data, so it performs poorly even on the training data.

10. Cross-validation: A technique to assess the performance and generalization ability of ML models by splitting the available data into multiple subsets for training and testing, allowing for a more robust evaluation.

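11. Bias and Variance Trade-off: The balance between bias (error from overly simple assumptions that miss the true underlying patterns, which leads to underfitting) and variance (error from excessive sensitivity to fluctuations or noise in the training data, which leads to overfitting). Reducing one typically increases the other, so the goal is a model with both reasonably low.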

12. Hyperparameters: Parameters that are not learned by the ML model itself but are set by the user before training. Examples include learning rate, regularization strength, or the number of hidden layers in a neural network.

13. Ensemble Learning: Combining multiple ML models to make predictions or decisions, often resulting in improved performance and generalization.

14. Deep Learning: A subset of ML that focuses on training deep neural networks with multiple layers to learn hierarchical representations of data, often used for complex tasks such as image recognition and natural language processing.

15. Deployment: The process of integrating a trained ML model into a production environment so that it can make predictions or decisions on new data, often in real time.

By familiarizing yourself with these keywords, you will be better equipped to understand and engage with the concepts and discussions surrounding Machine Learning.
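Cross-validation (item 10 in the list) is easier to grasp in code. The sketch below, written in plain Python as an illustration rather than any library’s implementation, splits sample indices into k folds, trains on k−1 of them, and measures the mean squared error on the held-out fold. The “model” is a deliberately trivial baseline that always predicts the average training target; `fit_mean` and `predict_mean` are hypothetical names.

```python
def k_fold_indices(n_samples, k):
    """Split indices 0..n_samples-1 into k contiguous folds.

    Returns a list of (train_indices, test_indices) pairs, one per fold.
    """
    base, extra = divmod(n_samples, k)
    folds, start = [], 0
    for i in range(k):
        size = base + (1 if i < extra else 0)  # spread the remainder over the first folds
        test = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        folds.append((train, test))
        start += size
    return folds


def cross_validate(xs, ys, k, fit, predict):
    """Return the mean squared error of a model on each of the k held-out folds."""
    scores = []
    for train, test in k_fold_indices(len(xs), k):
        model = fit([xs[i] for i in train], [ys[i] for i in train])
        errors = [(predict(model, xs[i]) - ys[i]) ** 2 for i in test]
        scores.append(sum(errors) / len(errors))
    return scores


def fit_mean(xs, ys):
    """Baseline 'training': remember the average target value."""
    return sum(ys) / len(ys)


def predict_mean(model, x):
    """Baseline prediction: ignore the input and return the remembered average."""
    return model


xs = list(range(10))
ys = [2 * x + 1 for x in xs]
print(cross_validate(xs, ys, k=5, fit=fit_mean, predict=predict_mean))
```

This sketch does not shuffle the data before splitting; in practice you would usually shuffle first (scikit-learn’s `KFold` supports this via `shuffle=True`) so that each fold is representative.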

Different Methods of Machine Learning

Machine Learning encompasses various methods and algorithms, each designed to tackle different types of problems and data scenarios. Here are some commonly used methods in Machine Learning:

1. Supervised Learning: This method involves training ML models using labeled data, where the desired output is known. The model learns to map input features to their corresponding outputs. Examples of supervised learning algorithms include linear regression, logistic regression, decision trees, support vector machines (SVM), and naive Bayes.

2. Unsupervised Learning: In this method, ML models analyze unlabeled data to find patterns, relationships, or structures within the data. Clustering algorithms, such as K-means and hierarchical clustering, group similar data points together. Dimensionality reduction techniques, such as Principal Component Analysis (PCA) and t-SNE, reduce the number of input features while preserving important information.

3. Reinforcement Learning: This method involves training an agent to interact with an environment and learn optimal actions based on rewards or punishments. The agent learns through trial and error, aiming to maximize long-term rewards. Q-learning and Deep Q-Networks (DQN) are popular reinforcement learning algorithms.

4. Semi-Supervised Learning: This method combines labeled and unlabeled data for training. It leverages the limited labeled data along with the larger unlabeled dataset to improve model performance. This approach is useful when obtaining labeled data is expensive or time-consuming.

5. Transfer Learning: Transfer learning allows pre-trained models, which were trained on large and general datasets, to be reused for new tasks or domains with smaller datasets. The pre-trained model’s knowledge is transferred and fine-tuned on the specific task or domain of interest, saving time and resources.

6. Deep Learning: Deep Learning is a subset of ML that uses deep neural networks to learn hierarchical representations of data. Deep neural networks consist of multiple layers of interconnected nodes (neurons) that learn and extract increasingly complex features from the input data. Convolutional Neural Networks (CNNs) are commonly used for image-related tasks, while Recurrent Neural Networks (RNNs) are useful for sequence-related tasks.

7. Ensemble Learning: Ensemble Learning combines multiple ML models to improve overall performance and generalization. By aggregating predictions from multiple models, Ensemble Learning can reduce biases, lower variance, and enhance robustness. Bagging, Boosting, and Random Forests are popular ensemble learning techniques.

8. Online Learning: Online Learning, also known as incremental or streaming learning, involves updating the ML model continuously as new data becomes available. This method is well-suited for scenarios where data arrives in a continuous stream and requires real-time updates to the model.

9. Neural Networks: Neural Networks are computational models inspired by the structure and functioning of the human brain. They consist of interconnected nodes (neurons) organized into layers, where each neuron performs a simple computation. These networks can learn complex patterns and relationships within the data.
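Of the methods above, reinforcement learning is probably the least intuitive, so here is a minimal tabular Q-learning sketch in Python. The environment is a made-up five-cell corridor: the agent starts in cell 0 and receives a reward of 1 only when it reaches cell 4. All names and parameter values (`alpha`, `gamma`, `epsilon`, the episode count) are illustrative assumptions. Each update nudges Q(s, a) toward reward + gamma × max Q(s′, ·), which is the standard Q-learning target.

```python
import random

N_STATES = 5        # cells 0..4; cell 4 is the goal and ends the episode
MOVES = [-1, +1]    # action 0 = step left, action 1 = step right


def step(state, action):
    """Move the agent; walls keep it inside the corridor."""
    next_state = min(max(state + MOVES[action], 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    reward = 1.0 if done else 0.0
    return next_state, reward, done


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.3, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]   # Q-table: q[state][action]
    for _ in range(episodes):
        state = 0
        for _ in range(100):                     # cap the episode length
            # Epsilon-greedy: explore at random sometimes (and on ties), otherwise exploit
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = step(state, action)
            # Q-learning target: immediate reward plus discounted best future value
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q


q_table = train()
policy = ["right" if row[1] > row[0] else "left" for row in q_table[:-1]]
print(policy)
```

After training, the greedy policy should choose “right” in every non-goal cell, because reaching the reward sooner is discounted less. Deep Q-Networks replace the table `q` with a neural network so the same idea can scale to very large state spaces.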

The two most common ways to guide a machine learning model are supervised and unsupervised learning. Depending on what data is available and what question is being asked, the algorithm is trained to generate an outcome using one of these methods.

What is Supervised Learning?

Overview

Let’s dive into the fascinating world of supervised learning.

Supervised learning is a method in Machine Learning where we train a model using labeled data, which consists of input features and their corresponding desired outputs or labels. The goal is to enable the model to learn the underlying patterns and relationships between the features and labels so that it can make accurate predictions or classifications on new, unseen data.

In supervised learning, we have a teacher-like approach, where the model learns from labeled examples and tries to generalize from them. It analyzes the labeled data, identifies patterns, and builds a model that can map input features to their corresponding outputs. This process is known as training.

Once the model is trained, it can take new inputs and predict or classify the desired outputs based on what it learned during training. The model aims to generalize its knowledge to unseen data and make accurate predictions in real-world scenarios.

Categories of Supervised Learning

In supervised learning, we can broadly categorize the types of problems based on the nature of the desired output or label. The two main categories of supervised learning are:

1. Regression: Regression is used when the desired output or label is a continuous numerical value. The goal of regression is to predict or estimate a quantitative value based on the input features. Examples of regression problems include predicting house prices, forecasting stock prices, or estimating the age of a person based on certain characteristics.

2. Classification: Classification is used when the desired output or label belongs to a finite set of categories or classes. The goal of classification is to assign a category or label to the input features. It deals with discrete or categorical outputs. Examples of classification problems include spam email detection (classifying emails as spam or not), sentiment analysis (classifying text as positive, negative, or neutral), or image recognition (classifying images into different object categories).

Let’s understand regression algorithms with an easy example.

Fond of Stories??

Then this is exactly for you.

In Machine Learning, regression algorithms are used when we want to predict or estimate a continuous numerical value. It’s like solving a puzzle where we have input features, and our goal is to find the missing piece, which is a numeric value.

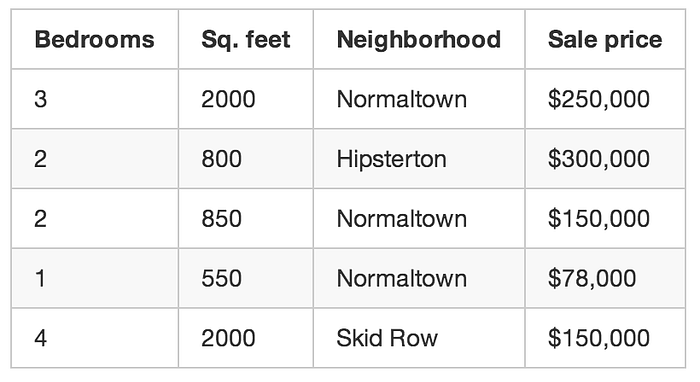

To understand regression, let’s imagine we are trying to predict the price of houses. We have a dataset with various features of different houses, such as the number of bedrooms, square footage, location, and so on. Additionally, we have the actual selling prices of those houses. This data is our labeled data, where the desired output is a continuous numerical value (the house price).

Special Note: The above picture is taken from this blog.

Now, we want to build a regression model that can learn from this labeled data and predict the price of a house based on its features. Our model is like a detective trying to find patterns and relationships between the features and the house prices.

During the training process, the regression algorithm analyzes the labeled data, looking for correlations between the input features and the corresponding house prices. It learns how changes in the features, like the number of bedrooms or the square footage, are related to changes in the house prices.

Once the model is trained, we can provide it with the features of a new, unseen house (e.g., the number of bedrooms, square footage, location), and it will predict the estimated price for that house based on what it learned from the training data.
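Here is a minimal sketch of that train-then-predict loop using the simplest regression model: a straight line fitted by ordinary least squares, with square footage as the only feature. The four houses and their prices are made-up numbers, chosen to lie exactly on a line so the result is easy to check; real house data is noisier and would use more features.

```python
def fit_line(xs, ys):
    """Ordinary least squares for one feature: returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical training data: square footage and selling price of four houses
square_feet = [1000, 1500, 2000, 2500]
prices = [200_000, 270_000, 340_000, 410_000]

slope, intercept = fit_line(square_feet, prices)
new_house = 1800
print(f"Predicted price for {new_house} sq ft: {slope * new_house + intercept:,.0f}")
```

For this data the fitted line is price = 140 × square feet + 60,000, so the unseen 1,800 sq ft house is predicted at 312,000.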

This is called supervised learning. We knew how much each house sold for; in other words, we knew the answer to the problem and could work backward from there to figure out the logic.

To build our app, we feed the training data about each house into our machine learning algorithm. The algorithm tries to figure out what kind of math needs to be done to make the numbers work out.

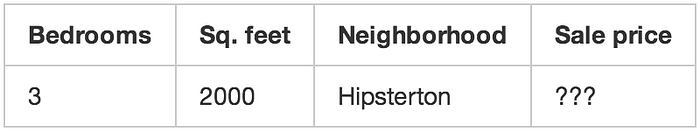

This kind of like having the answer key to a math test with all the arithmetic symbols erased:

Special Note: The above picture is taken from this blog.

From this, can you figure out what kind of math problems were on the test? You know you are supposed to “do something” with the numbers on the left to get each answer on the right.

In supervised learning, you are letting the computer work out that relationship for you. And once you know what math was required to solve this specific set of problems, you could answer any other problem of the same type!

Basically, the computer works out the general equation, along with the exact values it needs, that turns the inputs into the answers.
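The “answer key with the symbols erased” idea can be sketched in a few lines of Python. Here the hidden rule is assumed to be answer = 3 × a + 2 × b (a made-up formula, not the one in the picture), and gradient descent repeatedly adjusts two weights to shrink the mean squared error between its guesses and the known answers. The learning rate, step count, and the choice of no intercept term are illustrative simplifications.

```python
# Hypothetical "answer key": pairs of inputs and the answers they produced
inputs = [(1, 1), (2, 1), (1, 3), (3, 2), (2, 4)]
answers = [5, 8, 9, 13, 14]   # generated by the hidden rule 3*a + 2*b


def learn_weights(inputs, answers, lr=0.05, steps=2000):
    """Gradient descent on mean squared error for the model w0*a + w1*b."""
    w = [0.0, 0.0]                            # start with no knowledge of the rule
    n = len(inputs)
    for _ in range(steps):
        grad = [0.0, 0.0]
        for (a, b), y in zip(inputs, answers):
            error = w[0] * a + w[1] * b - y   # guess minus the known answer
            grad[0] += 2 * error * a / n      # d(mean squared error)/d(w0)
            grad[1] += 2 * error * b / n      # d(mean squared error)/d(w1)
        w = [w[0] - lr * grad[0], w[1] - lr * grad[1]]
    return w


w = learn_weights(inputs, answers)
print([round(v, 3) for v in w])               # recovered weights
print(round(w[0] * 4 + w[1] * 5, 3))          # answer a new problem: a=4, b=5
```

The recovered weights should come out very close to 3 and 2, and the model can then answer a problem it never saw (4 and 5 give 22).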

Regression algorithms can take different forms, such as linear regression, polynomial regression, or more complex algorithms like support vector regression or random forest regression. Each algorithm has its own way of capturing the relationships between the features and the continuous output.

I will discuss the different forms in more detail in another blog post.

Regression is widely used in various domains, such as finance (predicting stock prices), economics (forecasting sales), healthcare (estimating patient health metrics), and many others.

Let’s understand classification algorithms with an easy example.

Imagine you are a veterinarian, and you want to develop a model that can classify images of animals as either “Cats” or “Dogs” based on their visual features.

To train this classification model, you collect a dataset of labeled images of cats and dogs. Each image is associated with the correct label indicating whether it contains a cat or a dog.

Now, let’s say you have various features extracted from these images, such as the presence of pointy ears, the shape of the snout, the size of the eyes, and the overall body shape. These features serve as inputs to the classification model.

During the training process, the classification algorithm analyzes the labeled images, looking for patterns and features that distinguish cats from dogs. It learns that cats tend to have pointy ears, a slender snout, and relatively larger eyes, while dogs often have floppy ears, a broader snout, and proportionally smaller eyes. It may also learn that cats tend to be smaller than most dog breeds.

As the model continues to learn from more labeled examples, it becomes adept at recognizing these distinguishing features and building a decision-making process. It becomes like your furry friend identifier, capable of determining whether a new image is more likely to be a cat or a dog based on the visual characteristics it has learned.

What is this? Cat or Dog?⬇️⬇️

Once the model is trained, you can take a new image of an animal, provide it as input to the model, and it will predict whether the animal in the image is a cat or a dog.
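The post doesn’t name a specific classification algorithm, so as an illustration here is a k-nearest-neighbors sketch: a new animal gets the majority label of the k most similar training examples. The three features (ear pointiness, snout width, relative body size, each scaled to 0..1) and their values are hypothetical stand-ins for features extracted from images.

```python
import math
from collections import Counter

# Hypothetical hand-made features, each scaled to 0..1:
# (ear pointiness, snout width, relative body size)
training_data = [
    ((0.90, 0.20, 0.30), "cat"),
    ((0.80, 0.30, 0.20), "cat"),
    ((0.85, 0.25, 0.35), "cat"),
    ((0.30, 0.80, 0.70), "dog"),
    ((0.20, 0.70, 0.90), "dog"),
    ((0.40, 0.90, 0.60), "dog"),
]


def classify(features, data, k=3):
    """Label a new animal by majority vote among its k nearest training examples."""
    nearest = sorted(data, key=lambda item: math.dist(features, item[0]))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


print(classify((0.75, 0.30, 0.30), training_data))  # pointy ears, narrow snout, small
print(classify((0.30, 0.75, 0.80), training_data))  # floppy ears, broad snout, large
```

The first animal lands among the cats and the second among the dogs. Because k-NN compares raw distances, features should be on similar scales, which is why they are all scaled to 0..1 here.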

Classification problems involving animals can have various applications, such as wildlife monitoring, pet identification, or even facial recognition for animals.

Unsupervised Learning

Overview

Unsupervised learning is a category of machine learning where the algorithm learns patterns and structures in the data without explicit labels or guidance. Unlike supervised learning, there are no predefined correct answers or labeled examples provided to the algorithm.

Imagine you are part of a group of explorers embarking on a journey to an uncharted land. You have no map, no guide, and no specific instructions on what to expect. Your task is to explore this new territory, understand its natural structure, and uncover any hidden patterns or groups that exist within it.

This is similar to unsupervised learning in machine learning. Unsupervised learning is like exploring the unknown in your data without any predefined answers or labeled examples. It’s about discovering the inherent patterns and structures that exist naturally in the data, just as you discover the landscape and features of the new land.

In unsupervised learning, we use algorithms that delve into the data and try to find meaningful relationships or clusters. These algorithms are like your tools for exploration, helping you uncover the hidden treasures within the data.

Categories of Unsupervised Learning

Unsupervised learning algorithms are commonly grouped into four categories, which look for similarities, relationships, or deviations among the data:

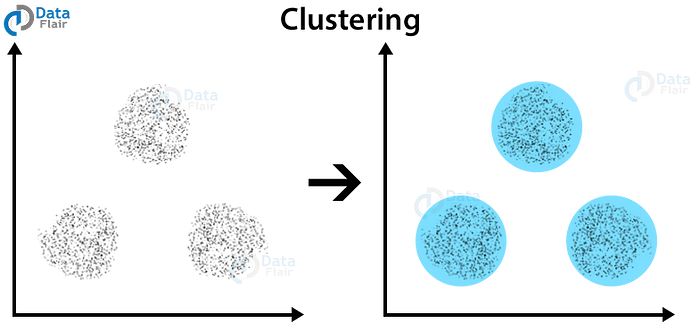

1. Clustering: Clustering algorithms group data points together based on their similarities or relationships. The algorithm looks for patterns or structures in the data and identifies natural clusters where data points within the same cluster are more similar to each other than to those in other clusters. It helps in discovering meaningful groups or segments within the data. For example, clustering can be used to segment customers into distinct groups based on their purchasing behavior or to group similar documents together in text analysis.

To put it simply, it’s like finding groups of similar objects in the data without being told which objects belong together. Just like when you explore the new land and notice similarities in the plants, animals, or terrain, clustering algorithms identify groups of data points that share common characteristics or properties.

2. Association: Association algorithms analyze the relationships and associations between variables in the data. They identify patterns or rules that indicate the co-occurrence or dependency between different attributes. For example, in a retail setting, an association algorithm can identify that customers who purchase item A are also likely to purchase item B. This can be useful in recommendation systems or market basket analysis.

3. Anomaly detection: Anomaly detection algorithms focus on identifying unusual or abnormal data points or patterns that deviate from the norm. These algorithms learn the normal behavior of the data and detect any instances that significantly differ from the expected patterns. Anomaly detection is used in various domains, such as fraud detection, network intrusion detection, or equipment failure prediction.

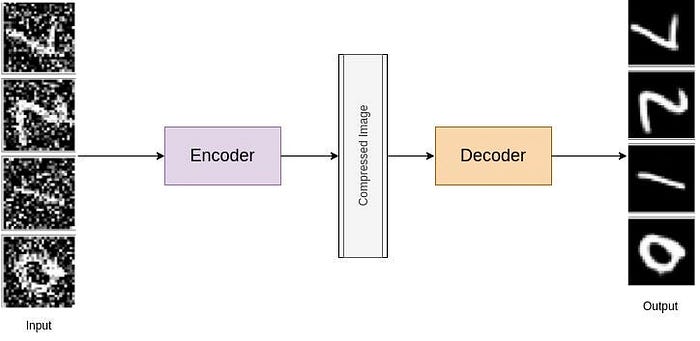

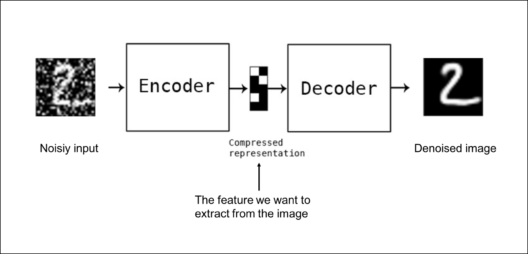

4. Artificial neural networks (Autoencoders): Autoencoders are a type of artificial neural network used in unsupervised learning. They are designed to learn efficient representations or compressions of the input data. The autoencoder takes the input data, compresses it into a lower-dimensional code (encoder), and then reconstructs the input data from that code (decoder). By learning to reconstruct the input data, the autoencoder can remove noise or extract meaningful features. It can be used for tasks such as denoising images, feature extraction, or dimensionality reduction.

Let’s understand Clustering with some easy examples.

Story time again!!😉

Imagine you are a detective investigating a mysterious crime scene with scattered evidence. Your task is to find any hidden connections among the pieces of evidence to uncover the truth. Well, clustering is like being that detective in the realm of data!

Clustering is the process of grouping similar data points together based on their characteristics or features. It’s like solving a giant puzzle where you’re trying to find the pieces that fit together naturally. Let’s take an example to illustrate this concept.

Love solving puzzles??

Imagine you are given a bag of colorful puzzle pieces, and your mission is to group them based on their shapes and colors. You notice that some pieces have similar shapes, while others share common colors. So, you start organizing the pieces into different piles based on their shape or color similarities.

In the world of machine learning, clustering algorithms work in a similar way. They analyze the features or characteristics of the data and group similar data points together. The algorithm examines the patterns, distances, or relationships among the data points to determine which points belong to the same cluster.

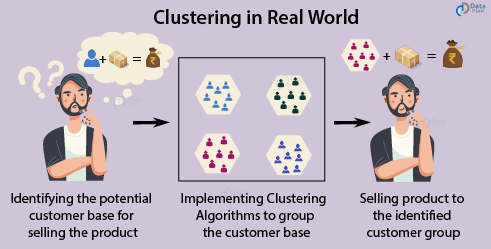

Ever wondered what clustering is used for in the real world? 🤔

Consider a marketing scenario where you have customer data with various attributes such as age, income, and purchasing behavior. By applying clustering techniques, you can identify distinct customer segments with similar traits. This can help businesses target their marketing strategies more effectively and tailor their products or services to specific customer groups.
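The customer-segmentation idea can be sketched with k-means, one common clustering algorithm (the post doesn’t commit to a specific one). k-means alternates two steps: assign each point to its nearest centroid, then move each centroid to the mean of its points, until nothing changes. The customer records, the choice of two clusters, and the shortcut of using the first k points as starting centroids are all assumptions for illustration; practical implementations use random or k-means++ initialization and usually scale features first so that income doesn’t dominate age.

```python
import math


def kmeans(points, k, max_iters=100):
    """Group points into k clusters; returns (labels, centroids)."""
    centroids = [list(p) for p in points[:k]]  # simplistic initialization
    labels = None
    for _ in range(max_iters):
        # Assignment step: each point joins the cluster with the nearest centroid
        new_labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        if new_labels == labels:  # no point changed cluster, so we have converged
            break
        labels = new_labels
        # Update step: move each centroid to the mean of its assigned points
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]
    return labels, centroids


# Hypothetical customers: (age, annual income in thousands)
customers = [(22, 25), (25, 30), (24, 28), (45, 110), (50, 120), (48, 115)]
labels, centroids = kmeans(customers, k=2)
print(labels)
print(centroids)
```

The three younger, lower-income customers end up in one segment and the three older, higher-income customers in the other, and each centroid summarizes a “typical” member of its segment.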

So, in a nutshell, clustering in machine learning is like being a detective, organizing puzzle pieces, or discovering hidden communities. It helps us find similarities, group data points together, and uncover valuable insights from unlabeled data.

Let’s understand Association with some easy examples.

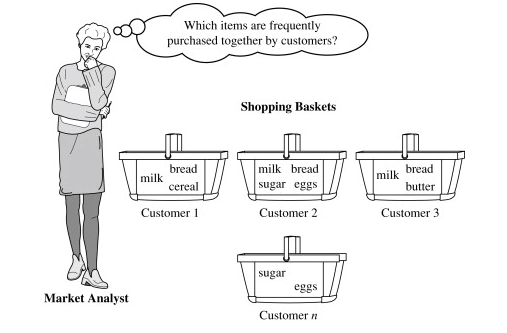

Association in unsupervised learning is all about discovering relationships and dependencies between different attributes or variables in a dataset. It helps us understand how certain attributes are often associated or occur together. This knowledge can then be used for making predictions or uncovering additional information.

Let’s consider a simple example of association. Imagine you have a transaction dataset from a grocery store, containing information about customer purchases. By applying association analysis, you can discover interesting associations between different products that are commonly bought together. For instance, the analysis might reveal that customers who buy bread are highly likely to also purchase butter and milk. This association can be leveraged to improve sales strategies, such as placing bread, butter, and milk in close proximity to encourage additional purchases.
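The strength of a rule such as “bread → butter” is usually measured with support, confidence, and lift. Here is a minimal Python sketch on a handful of made-up baskets: support is the share of all baskets containing both items, confidence is the share of bread baskets that also contain butter, and lift compares that confidence with how often butter is bought overall.

```python
# Hypothetical grocery transactions, one set of items per basket
transactions = [
    {"bread", "butter", "milk"},
    {"bread", "butter"},
    {"bread", "milk"},
    {"bread", "butter", "milk", "eggs"},
    {"milk", "eggs"},
]


def count(itemset, transactions):
    """Number of baskets that contain every item in itemset."""
    return sum(1 for basket in transactions if itemset <= basket)


def rule_stats(antecedent, consequent, transactions):
    """Support, confidence, and lift of the rule antecedent -> consequent."""
    n = len(transactions)
    both = count(antecedent | consequent, transactions)
    support = both / n
    confidence = both / count(antecedent, transactions)
    lift = confidence / (count(consequent, transactions) / n)
    return support, confidence, lift


support, confidence, lift = rule_stats({"bread"}, {"butter"}, transactions)
print(f"bread -> butter: support={support:.2f}, confidence={confidence:.2f}, lift={lift:.2f}")
```

Here 75% of bread baskets contain butter versus 60% of all baskets, giving a lift of 1.25; a lift above 1 suggests the items really do go together. Algorithms such as Apriori search for all rules above chosen support and confidence thresholds, whereas this sketch only scores one rule you already have in mind.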

Real-Life Use Cases?

A very relatable use case would be👇

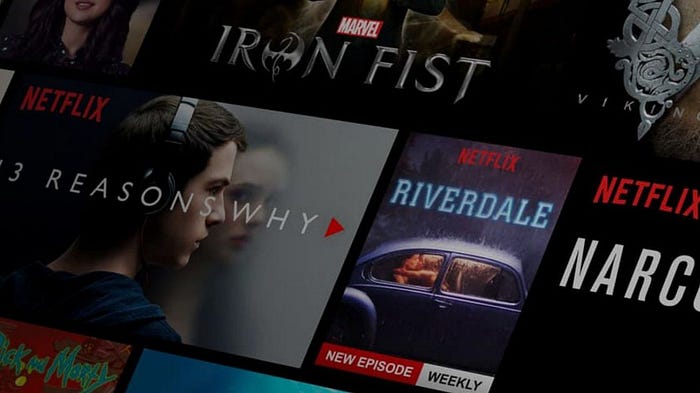

Recommender Systems

Recommender systems use association analysis to provide personalized recommendations to users. By examining past user behavior or preferences, the system can identify items that are commonly associated with the user’s interests.

For example, streaming platforms suggest movies or songs based on the associations between users with similar tastes.

Apart from that, another good example is:

Market Basket Analysis

Market basket analysis is a classic application of association in retail. By analyzing customer purchase data, retailers can identify frequently co-occurring items and create targeted promotions or product placements. For instance, if customers often buy chips and salsa together, the store may offer a discount when purchasing both items to encourage additional sales.

Let’s understand Anomaly Detection with some easy examples.

Anomaly detection in machine learning is like having an intelligent assistant that helps you spot abnormal behavior amid a sea of regular patterns.

Anomaly detection, also known as outlier detection, focuses on finding data points or patterns that are significantly different from the majority of the data. It helps us identify rare occurrences and unexpected behaviors that may indicate potential problems or events of interest.

Let’s first understand how this works with a simple analogy, and then look at some real-life examples.

Imagine you are a security guard responsible for monitoring people entering and exiting a building. You observe the flow of individuals throughout the day and become familiar with the regular patterns of movement. Most people enter through the main entrance, follow specific paths, and leave after some time. These behaviors represent the normal or expected patterns.

Now, if someone enters through a side door, moves in an erratic manner, or behaves suspiciously, you would consider it an anomaly. This abnormal behavior stands out from the regular patterns you’ve observed. Network intrusion detection applies the same idea to spot unusual activity within network traffic.

Network Intrusion Detection:

By analyzing historical network traffic data, anomaly detection algorithms learn the normal patterns of network behavior, such as the types of communication, data volume, and typical traffic flow. When a new network event occurs that deviates significantly from the established patterns, it is flagged as an anomaly.

For example, if there is a sudden spike in data traffic from a specific IP address or unusual communication patterns between network nodes, the anomaly detection system will detect these deviations. It alerts network administrators or security personnel to investigate the event further as it may indicate a potential network intrusion or malicious activity.
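One of the simplest ways to implement this “learn normal, then flag deviations” idea is a z-score threshold, sketched below in Python. It learns the mean and standard deviation of past traffic from one IP address and flags new observations more than three standard deviations away. The traffic numbers and the threshold of 3 are illustrative assumptions; real intrusion-detection systems model many signals at once.

```python
import statistics


def fit_baseline(history):
    """Learn 'normal' traffic as the mean and standard deviation of past observations."""
    return statistics.mean(history), statistics.pstdev(history)


def is_anomaly(value, baseline, threshold=3.0):
    """Flag a value whose z-score (distance from the mean in standard deviations) exceeds threshold."""
    mean, std = baseline
    if std == 0:
        return value != mean
    return abs(value - mean) / std > threshold


# Hypothetical requests per minute from one IP address during normal operation
history = [100, 98, 103, 97, 101, 99, 102, 100]
baseline = fit_baseline(history)
for observed in (104, 150):
    print(observed, "anomaly" if is_anomaly(observed, baseline) else "normal")
```

A reading of 104 requests per minute is within normal variation, while a spike to 150 is far outside it and gets flagged for investigation.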

Fraud Detection:

Imagine you are a credit card company, and you want to detect fraudulent transactions.

Anomaly detection algorithms can analyze historical transaction data and learn the normal spending patterns of customers. When a transaction occurs that deviates significantly from the established patterns, such as an unusually large purchase or a transaction from an unfamiliar location, it is flagged as a potential anomaly and subjected to further investigation or validation.
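The two signals mentioned above, an unusually large purchase and an unfamiliar location, can be sketched as a per-customer profile learned from past transactions. In this Python sketch the customer, amounts, cities, and the one-sided z-score threshold of 3 are all hypothetical; production fraud systems combine many more features and usually a trained model.

```python
import statistics
from collections import defaultdict


def build_profiles(history):
    """Learn each customer's normal spending: mean amount, spread, and known locations."""
    amounts, locations = defaultdict(list), defaultdict(set)
    for customer, amount, location in history:
        amounts[customer].append(amount)
        locations[customer].add(location)
    return {
        c: (statistics.mean(a), statistics.pstdev(a), locations[c])
        for c, a in amounts.items()
    }


def check_transaction(profiles, customer, amount, location, z_threshold=3.0):
    """Return the reasons (if any) a new transaction looks unusual for this customer."""
    mean, std, known_locations = profiles[customer]
    reasons = []
    if std > 0 and (amount - mean) / std > z_threshold:
        reasons.append("unusually large amount")
    if location not in known_locations:
        reasons.append("unfamiliar location")
    return reasons


# Hypothetical transaction history: (customer, amount, city)
history = [("alice", 40.0, "Madrid"), ("alice", 55.0, "Madrid"), ("alice", 38.0, "Madrid"),
           ("alice", 60.0, "Madrid"), ("alice", 47.0, "Madrid")]
profiles = build_profiles(history)
print(check_transaction(profiles, "alice", 52.0, "Madrid"))   # looks normal
print(check_transaction(profiles, "alice", 900.0, "Madrid"))  # large purchase
print(check_transaction(profiles, "alice", 50.0, "Lisbon"))   # new location
```

An empty list means nothing looks unusual; otherwise the returned reasons can be passed on for further investigation or validation.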

Let’s understand Artificial neural networks with some easy examples.

Artificial neural networks, specifically autoencoders, are powerful tools in machine learning that can learn to extract meaningful representations of input data. Let’s understand autoencoders with a simple and intuitive example.

Imagine you are an artist who wants to create a simplified representation of a complex image. You start with a high-resolution image and want to transform it into a lower-dimensional representation while retaining the important features. Autoencoders can help you achieve this.

Components

In this scenario, the autoencoder consists of two main components: an encoder and a decoder. The encoder takes the high-resolution image as input and compresses it into a lower-dimensional representation, known as the latent space or code. The decoder then takes this compressed representation and reconstructs the image, attempting to recreate it as faithfully as possible.

How does it work?

The beauty of autoencoders lies in their ability to learn a compact representation of the input data. By training the autoencoder on a dataset of high-resolution images, it learns to capture the essential features of the images in the latent space. This compressed representation can then be used for various purposes, such as image compression, denoising, or even generating new images.

For example, let’s say you train an autoencoder on a dataset of human faces. The encoder part of the autoencoder learns to extract key facial features, such as eyes, nose, and mouth, from the high-resolution input images. It compresses this information into a lower-dimensional code. The decoder part of the autoencoder takes this code and reconstructs the face by generating a new image that resembles the original input.
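To show the encoder/decoder idea without a deep learning library, here is a deliberately tiny linear autoencoder in plain Python. It is an assumption-heavy sketch rather than a realistic model: each “image” is just a two-number point lying on the line x2 = 2 × x1, the encoder squeezes it into a single number (the code), and the decoder tries to rebuild both numbers from that code. Training uses plain gradient descent on the mean squared reconstruction error; the starting weights, learning rate, and epoch count are illustrative choices. Real autoencoders use many layers and nonlinear activations, and are typically built with frameworks such as PyTorch or TensorFlow.

```python
# Hypothetical data: 2-D points that all lie on the line x2 = 2 * x1,
# so a single number per point is enough to describe them.
data = [(t, 2 * t) for t in (-1.0, -0.5, 0.0, 0.5, 1.0)]


def encode(a, x):
    """Encoder: compress a 2-D point into a 1-D code."""
    return a[0] * x[0] + a[1] * x[1]


def decode(b, z):
    """Decoder: rebuild a 2-D point from the 1-D code."""
    return (b[0] * z, b[1] * z)


def reconstruction_error(a, b, data):
    """Mean squared distance between each point and its reconstruction."""
    total = 0.0
    for x in data:
        x_hat = decode(b, encode(a, x))
        total += (x_hat[0] - x[0]) ** 2 + (x_hat[1] - x[1]) ** 2
    return total / len(data)


def train_autoencoder(data, lr=0.05, epochs=500):
    a = [0.3, 0.1]  # encoder weights (arbitrary nonzero start)
    b = [0.2, 0.4]  # decoder weights (arbitrary nonzero start)
    n = len(data)
    for _ in range(epochs):
        grad_a, grad_b = [0.0, 0.0], [0.0, 0.0]
        for x in data:
            z = encode(a, x)
            x_hat = decode(b, z)
            err = (x_hat[0] - x[0], x_hat[1] - x[1])
            # Gradients of the mean squared error via the chain rule
            dz = 2 * (err[0] * b[0] + err[1] * b[1]) / n
            for j in range(2):
                grad_b[j] += 2 * err[j] * z / n
                grad_a[j] += dz * x[j]
        for j in range(2):
            a[j] -= lr * grad_a[j]
            b[j] -= lr * grad_b[j]
    return a, b


a, b = train_autoencoder(data)
print("code for (1, 2):", round(encode(a, (1.0, 2.0)), 3))
print("reconstruction error:", reconstruction_error(a, b, data))
```

The code for (1, 2) is a single number, yet the decoder turns it back into (1, 2) almost exactly: compression to a smaller representation and reconstruction, in miniature.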

Real-Life Use Cases

Image Compression:

Given a high-resolution image, the encoder compresses it into a smaller code. This code represents a compressed representation of the image. By discarding some unnecessary information, the image size is reduced while still retaining the key features. The decoder can then reconstruct the image from this compressed representation.

Image Denoising:

Autoencoders can also be used for denoising images. By training the autoencoder on a dataset of noisy images and their clean counterparts, the encoder learns to extract the underlying clean features from the noisy input. It compresses the noisy image into a code and the decoder reconstructs a denoised version of the image based on this code.

Importance and Impact of Machine Learning

1. Automation and Efficiency: ML enables automation of repetitive tasks and processes, leading to increased efficiency and productivity. It can handle large volumes of data, identify patterns, and make predictions or decisions much faster than humans.

2. Personalization: ML algorithms have transformed how businesses interact with customers by providing personalized recommendations, tailored marketing campaigns, and customized user experiences. ML-powered recommender systems, chatbots, and virtual assistants have become integral to enhancing customer satisfaction and engagement.

3. Healthcare Advancements: Machine learning is revolutionizing healthcare by enabling early disease detection, accurate diagnosis, and personalized treatment plans. ML algorithms can analyze medical images, predict patient outcomes, and assist in drug discovery, leading to improved patient care and outcomes.

4. Financial Services: ML algorithms are widely used in the finance industry for fraud detection, credit scoring, algorithmic trading, and risk assessment. ML models can analyze vast amounts of financial data in real-time, identify anomalies, and make rapid decisions, enhancing security and efficiency in financial processes.

5. Smart Manufacturing and Supply Chain Optimization: ML algorithms help optimize manufacturing processes, predict equipment failures, and enhance supply chain management. Predictive maintenance and demand forecasting based on ML models reduce downtime, improve efficiency, and minimize costs.

6. Transportation and Autonomous Vehicles: Machine learning plays a crucial role in autonomous vehicles by enabling real-time perception, decision-making, and control. ML algorithms process sensor data to detect objects, predict behaviors, and ensure safe and efficient navigation.

7. Research and Innovation: ML has significantly accelerated research and innovation across various domains. It aids in data analysis, pattern recognition, and simulations, enabling scientists and researchers to make breakthrough discoveries in areas such as genomics, climate science, and drug development.

8. Improved Decision-Making: ML models assist in decision-making by providing data-driven insights and predictions. From business forecasting to risk assessment, ML algorithms help businesses and organizations make informed decisions, leading to better outcomes and competitive advantages.

In conclusion, machine learning is a powerful and transformative technology that has revolutionized various industries, enabling automation, personalization, and data-driven decision-making. Its impact on society and the economy continues to grow, with new advancements and applications being discovered regularly.

Credits: Sayantan Samanta
